Elm Robots and Humans

Humans build websites using a vast number of tools and technologies. Elm is a functional programming language for building reliable websites. Robots crawl websites and gather useful information for search engines.

In this note we will look at what goes into robots.txt and humans.txt files and how to generate their content using Elm.

Humans are odd. They think order and chaos are somehow opposites and try to control what won't be. But there is grace in their failings.

-- Vision, Avengers: Age of Ultron

Robots.txt

Robots.txt is a public file on our website where we can define policies, sitemaps and the host name. These tell crawlers where, when and what they can access and index for search engines.

A robots.txt file can look something like this:

```
User-agent: *
Allow: *
Sitemap: https://marcodaniels.com/sitemap.xml
Host: https://marcodaniels.com
```

This allows all robots (User-agent: *) to access all pages (Allow: *) on this website. It also points to where the sitemap can be found and declares the host.
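To see how a crawler might read these lines, here is a minimal Elm sketch that turns robots.txt content into key/value directives. It is not part of elm-robots-humans, and the module and function names are hypothetical. It splits each line at the first colon only, so values such as the sitemap URL keep their own "https:" colon.

```elm
module RobotsParse exposing (Directive, parseRobots)

-- Hypothetical sketch: read robots.txt content as "Key: value" directives.
-- Not part of the elm-robots-humans package.


type alias Directive =
    { key : String
    , value : String
    }



-- Split one line at the FIRST ':' into a lowercased key and a trimmed value.
-- Blank lines, '#' comments and lines without ':' are skipped.


parseLine : String -> Maybe Directive
parseLine line =
    let
        trimmed =
            String.trim line
    in
    if String.isEmpty trimmed || String.startsWith "#" trimmed then
        Nothing

    else
        case String.indexes ":" trimmed of
            i :: _ ->
                Just
                    { key = String.toLower (String.trim (String.left i trimmed))
                    , value = String.trim (String.dropLeft (i + 1) trimmed)
                    }

            [] ->
                Nothing


parseRobots : String -> List Directive
parseRobots content =
    List.filterMap parseLine (String.lines content)
```

Lowercasing the key lets "User-agent" and "user-agent" be treated alike. Directive names in robots.txt are commonly matched case-insensitively.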

Policies

In our robots.txt we can define multiple policies for multiple user-agents.

In this example we disallow the /search page for Googlebot only, and for Bingbot we disallow all pages on our website. Finally, we allow all other crawlers to access all our pages.

```
User-agent: Googlebot
Disallow: /search

User-agent: Bingbot
Disallow: /

User-agent: *
Allow: *
```
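These policies rely on each crawler picking the group that names it. Below is a simplified Elm sketch of that lookup. It assumes exact, case-insensitive user-agent matching with a fallback to the * group. Real crawlers may also merge several groups that share a user-agent. Group, rulesFor and examplePolicies are hypothetical names.

```elm
module RobotsGroups exposing (Group, examplePolicies, rulesFor)

-- Hypothetical sketch of group selection; not the elm-robots-humans API.


type alias Group =
    { userAgent : String
    , rules : List String
    }



-- The policies from the example above.


examplePolicies : List Group
examplePolicies =
    [ { userAgent = "Googlebot", rules = [ "Disallow: /search" ] }
    , { userAgent = "Bingbot", rules = [ "Disallow: /" ] }
    , { userAgent = "*", rules = [ "Allow: *" ] }
    ]



-- Use the group naming this crawler; otherwise fall back to the "*" group.


rulesFor : String -> List Group -> List String
rulesFor crawler groups =
    let
        named name =
            List.filter (\g -> String.toLower g.userAgent == String.toLower name) groups
    in
    case named crawler of
        group :: _ ->
            group.rules

        [] ->
            named "*"
                |> List.head
                |> Maybe.map .rules
                |> Maybe.withDefault []
```

With these policies, Googlebot only avoids /search, Bingbot is kept out entirely, and any other crawler falls through to Allow: *.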

Crawl-Delay

Crawl-delay asks crawlers to wait a specific amount of time (in seconds) between successive requests to our pages.

```
User-agent: Googlebot
Crawl-delay: 120
Disallow: /search
```

This directive can be set per user-agent, although not every crawler honors it. Googlebot, for example, ignores Crawl-delay.
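Because the value is a number of seconds, a crawler that honors Crawl-delay has to convert it before scheduling its next request. Here is a small hypothetical Elm sketch of that step. It assumes whole-number delays and timestamps in milliseconds. The function names are illustrative only.

```elm
module CrawlDelay exposing (crawlDelaySeconds, earliestNextRequest)

-- Hypothetical sketch; not part of elm-robots-humans.
-- Read a "Crawl-delay: N" line as a whole number of seconds.


crawlDelaySeconds : String -> Maybe Int
crawlDelaySeconds line =
    case String.split ":" line of
        [ key, value ] ->
            if String.toLower (String.trim key) == "crawl-delay" then
                String.toInt (String.trim value)

            else
                Nothing

        _ ->
            Nothing



-- Earliest time (ms) for the next request, given the previous request time (ms)
-- and a delay in seconds.


earliestNextRequest : Int -> Int -> Int
earliestNextRequest lastRequestMillis delaySeconds =
    lastRequestMillis + delaySeconds * 1000
```

With Crawl-delay: 120, a polite crawler would wait two minutes (120000 ms) between requests.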

Clean-Param

Clean-param tells crawlers to ignore the given parameter(s) in the URL's query string. URLs that differ only in those parameters are then treated as the same page.

```
User-agent: *
Allow: *
Clean-param: id /user
```

This tells crawlers to strip the id parameter from URLs such as .../user?id=1234.
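Here is a simplified Elm sketch of the effect of Clean-param: id /user. It assumes a plain path?query URL without a fragment. The cleanParam function is hypothetical and only illustrates the idea; it is not how any particular crawler implements the directive.

```elm
module CleanParam exposing (cleanParam)

-- Hypothetical sketch: for URLs whose path starts with pathPrefix,
-- drop every query pair whose name equals param.


cleanParam : String -> String -> String -> String
cleanParam param pathPrefix url =
    case String.split "?" url of
        [ path, query ] ->
            if String.startsWith pathPrefix path then
                let
                    kept =
                        String.split "&" query
                            |> List.filter (\pair -> List.head (String.split "=" pair) /= Just param)
                in
                if List.isEmpty kept then
                    path

                else
                    path ++ "?" ++ String.join "&" kept

            else
                url

        _ ->
            url
```

Other parameters are preserved, and URLs outside the /user prefix are left untouched.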

Humans.txt

Humans.txt is a fun initiative where we can introduce the people behind our website, share which technologies and standards we follow, and offer acknowledgements and greetings to the humans who built the website.

```
/* Team */
Engineer: Marco Martins

/* Technology */
Elm, Terraform, Nix
```

The humans.txt file does not have a strictly defined structure. Since it is a file from humans to humans, it can take many formats and contain all sorts of information.

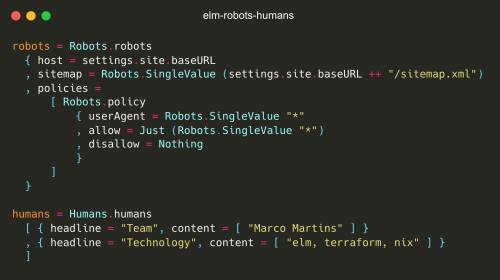

elm-robots-humans

elm-robots-humans is an Elm package that allows us to write robots.txt and humans.txt file contents in a structured and typed manner.

The Robots module exposes functions and types that allow us to write policies per user-agent with all the directives we need.

```elm
import Robots


robots : String
robots =
    Robots.robots
        { sitemap = Robots.SingleValue "/sitemap.xml"
        , host = "https://marcodaniels.com"
        , policies =
            [ Robots.policy
                { userAgent = Robots.SingleValue "*"
                , allow = Just (Robots.SingleValue "*")
                , disallow = Nothing
                }
                -- add a Crawl-delay directive to this policy
                |> Robots.withCrawlDelay 120
            ]
        }
```

Some properties (sitemap, userAgent, allow, disallow) can hold either a single string or several strings. To handle this, the package uses a Value custom type that lets us be more expressive about our policy needs. The example above generates the following string:

```
User-agent: *
Allow: *
Crawl-delay: 120
Sitemap: /sitemap.xml
Host: https://marcodaniels.com
```
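The hypothetical Elm sketch below shows why a single/multiple Value type is handy: it renders one directive line per value. SingleValue comes from the example above. MultiValue is an assumed name for the multi-valued case, and this is not the package's source code.

```elm
module ValueSketch exposing (Value(..), directive)

-- Hypothetical Value-like type. SingleValue appears in the article;
-- MultiValue is an assumed constructor name.


type Value
    = SingleValue String
    | MultiValue (List String)



-- Render one "Key: value" line per value, joined with newlines.


directive : String -> Value -> String
directive key value =
    let
        values =
            case value of
                SingleValue v ->
                    [ v ]

                MultiValue vs ->
                    vs
    in
    values
        |> List.map (\item -> key ++ ": " ++ item)
        |> String.join "\n"
```

For example, directive "Disallow" (MultiValue [ "/search", "/admin" ]) would produce two Disallow lines, one per path.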

For humans.txt, the Humans module allows us to write each "section" with just a headline and its content:

```elm
import Humans


humans : String
humans =
    Humans.humans
        [ { headline = "Team"
          , content = [ "Engineer: Marco Martins" ]
          }
        , { headline = "Technology"
          , content = [ "Elm, Terraform, Nix" ]
          }
        ]
```

Since humans.txt content does not require much structure, this keeps creating it simple. The example generates the following string:

```
/* Team */
Engineer: Marco Martins

/* Technology */
Elm, Terraform, Nix
```
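For comparison, here is a hypothetical Elm sketch of how such sections could be turned into text. It is not the package's implementation. The exact spacing, with a blank line between sections, is an assumption.

```elm
module HumansSketch exposing (Section, render)

-- Hypothetical sketch: each headline becomes a "/* Headline */" line,
-- followed by its content lines; sections are separated by a blank line.


type alias Section =
    { headline : String
    , content : List String
    }


render : List Section -> String
render sections =
    sections
        |> List.map (\s -> String.join "\n" (("/* " ++ s.headline ++ " */") :: s.content))
        |> String.join "\n\n"
```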

You're still here?

Thank you so much for reading this!

Go ahead and check out the elm-robots-humans package and its source code on GitHub.

You can also see it in action on this website at /robots.txt, along with the respective source code on GitHub.

Until then!